Your Tiny Computer Can Think Big...Literally!

Hey there, fellow humans!

Ever feel like your data is being held hostage by tech giants? Well, it's time to break free. Forget those massive data centers guzzling electricity and hoarding your precious information like some digital squirrel guarding its stash of nuts. Local AI lets you run powerful AI models on things like a Raspberry Pi, a Jetson Nano, and even that dusty old laptop hiding in the back of your closet (don’t judge!). It’s like having a tiny supercomputer with a giant brain! 🧠

Sure, your phone already has some AI in it, but it mostly does basic stuff like recognizing faces and suggesting cat videos (which is awesome, don’t get me wrong). Local AI takes things to the next level:

Freedom From Big Tech: You own your data, control how it’s used, and aren't beholden to big tech's whims (or occasional outages). Think of it as having a personal AI butler who only reports to you.

Privacy: Ever get creeped out by how much online services seem to know about you? Local AI keeps your sensitive data right where it belongs – on your device. No more sharing your innermost thoughts with faceless algorithms! 🤐

The Power of Customization: Don't settle for pre-packaged solutions. Fine-tune models to your specific needs, create custom apps, and even experiment with cutting-edge AI research from your own workbench. It’s like having a personal AI playground! 🚀

Think about it: what if you could have a pocket-sized computer capable of running an AI model as smart as ChatGPT, all without relying on big tech companies? Imagine controlling your own data, analyzing information privately, and creating personalized AI experiences tailored to your needs – all within the confines of your own device.

DeepSeek is showing us exactly how it’s done. They managed to train their incredibly powerful AI models using older, less expensive Nvidia GPUs. But they didn’t just settle there; DeepSeek engineers came up with innovative techniques to make these GPUs work smarter, not harder.

The ripples from this tech tsunami are reaching far beyond the boardrooms of Silicon Valley. Investors are freaking out, stocks are plummeting, and everyone’s wondering if their carefully crafted AI dreams were built on shaky ground. At the start of last week, Nvidia lost almost $600 BILLION in market value in a single day – the largest ever drop for any public company.

It makes you wonder if all this AI hype is just a giant Ponzi scheme. One minute they're telling us robots are going to steal our jobs, the next they're releasing AI models you could probably buy with the change from your last latte. Before you know it, this stuff will be spreading like wildfire, getting smarter and faster than Donald Trump being indicted multiple times and still becoming president.

It’s like watching those self-driving cars hit the road – sure, some of them might crash spectacularly, but the rest? They're gonna be everywhere before we can say "Model S." We're basically at the point where AI is going to start writing its own press releases and making its own TikTok dances. It’s like the internet in the 90s all over again – except this time, it might actually be sentient and plotting world domination.

Meanwhile, DeepSeek is out there casually sipping tea, probably plotting its next move while everyone else scrambles to catch up.

Speaking of which, just days after launching DeepSeek R1, they dropped another bombshell: a brand new image generation model, Janus-Pro-7B.

It’s an open-source image generation model that DeepSeek claims outperforms competitors like OpenAI's DALL-E 3 and Stability AI's Stable Diffusion. The model is available for free download on GitHub and Hugging Face.

Key features of Janus-Pro-7B include:

Ability to both analyze and create new images

Trained on 72 million high-quality synthetic images balanced with real-world data

Employs a SigLIP-L vision encoder for image understanding

Uses a tokenizer with a downsample rate of 16 for improved image generation

But they’re not the only Asian AI play that’s making headlines. On Wednesday, Alibaba released their latest model, Qwen 2.5-Max. Alibaba claims that Qwen 2.5-Max outperforms several leading AI models, including:

DeepSeek-V3

OpenAI's GPT-4o

Meta's Llama-3.1-405B

So, forget the days when you had to have a software engineering degree or own a small fortune to pay for every AI tool out there; with open-source models like DeepSeek, Llama, and others, the future of local AI is bright, open, and in your hands.

So strap in, grab your metaphorical soldering iron, and let's dive into the exciting world of tiny computers with giant brains!

Meet the Hardware: Small Form Factor, Huge Impact

So, these open-source models come in all shapes and sizes, from a tiny model running on your phone to filter spam messages to a beefier one powering a robot learning to stack blocks (and hopefully not crushing anything). It's like choosing the right wrench for the job – the bigger the model, the more complex the task it can handle.

We're on the cusp of something HUGE! Think LLMs that are proactive and function independently, without even being prompted (autonomous agents), and AI that can actually reason (approaching problems in a similar fashion to how a human would). This stuff used to be exclusive to mega-corporations with deep pockets, but now it's all up for grabs. Thanks to DeepSeek, we can tinker with these powerful models and make them our own.

We can download pre-built ones or get crafty and build something totally new.

BUT… we gotta talk about the elephant in the room – energy consumption. This fancy AI stuff can be a real power hog, and it's raising some serious questions.

Running a full-blown LLM like ChatGPT on your average laptop is like trying to fuel a sports car with a bicycle engine; it just doesn't make sense. Those complex calculations demand a lot of juice, and the carbon footprint isn't exactly eco-friendly.

ChatGPT shines when it's handling millions of requests simultaneously, learning from every interaction and refining its responses. For most everyday tasks, a smaller, more focused LLM would be more than sufficient – like having a trusty sidekick who knows your preferences and can whip up a quick response without needing a massive power plant to keep going.

That's where the magic of open-source models comes in. We can now run powerful AI capabilities on devices like Raspberry Pis that consume significantly less energy. Imagine filtering spam, generating creative text, or even controlling your smart home – all powered by a tiny device that sips energy instead of guzzling it down. This shift towards localized AI is not only more practical but also opens up exciting possibilities for sustainable and accessible technology.

Let's get specific about building your own AI, from a simple chatbot to something far more advanced.

Raspberry Pi

For beginners, the Raspberry Pi 4 Model B is a fantastic starting point. It's powerful enough to handle lightweight language models and runs Raspberry Pi OS (formerly Raspbian), which comes with Python pre-installed. You can start with distilled versions of models like Llama, DeepSeek R1, or Phi-2 – they're smaller, faster, and perfect for learning the ropes. Think of it as giving your little Pi a brain boost!

And if you want to supercharge that brain even further, check out the Raspberry Pi AI Kit! It includes a Hailo-8L AI accelerator module that can handle 13 TOPS (Tera-Operations Per Second)!

Here's a list of compatible models that should work on a bare-bones Raspberry Pi 5 with 8GB RAM:

Qwen2.5-0.5b-int4 (398MB)

Qwen:0.5b (394MB)

Qwen2:0.5b (352MB)

TinyLlama-1.1B-Chat

Phi-2-Q4

Gemma 2B

Phi-3 3.8B

LLaMA 3.2 1B and 3B versions

DeepSeek R1:1.5b
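Curious what "running a model locally" looks like in practice? Here's a minimal sketch, assuming you serve the model with Ollama (our assumption; it's one popular local runner, and tags like `qwen2:0.5b` follow its naming style) and that it's listening on its default port. The helper names `build_generate_request` and `ask` are made up for illustration.

```python
import json
import urllib.request

# Assumption: an Ollama server is running on this machine on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> dict:
    """Build the JSON body for a single, non-streaming completion."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask(model: str, prompt: str, url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    body = json.dumps(build_generate_request(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # With streaming off, the reply is one JSON object with a "response" field.
        return json.loads(response.read())["response"]


# Example (needs the server running and the model already downloaded):
# print(ask("qwen2:0.5b", "Explain what a Raspberry Pi is in one sentence."))
```

Nothing leaves your device here: the request goes to localhost and the answer comes straight back. Expect a small board to take its time on longer prompts, hence the generous timeout.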

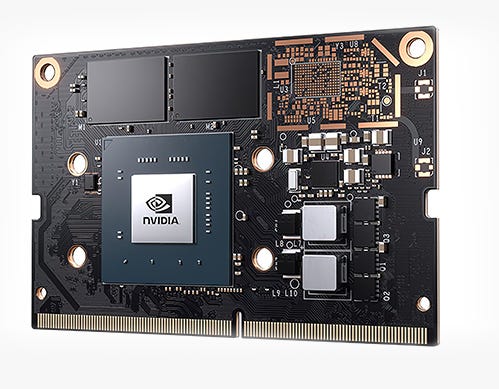

NVIDIA Jetson Nano

If you're ready to step up your game, the NVIDIA Jetson Nano takes things to the next level with its increased processing power. It runs Ubuntu OS, giving you a more robust development environment. Here, you can explore more complex models and delve into the world of reasoning agents.

Price & Technical Specifications:

NVIDIA Jetson Nano 4GB Developer Kit: $99

NVIDIA Jetson Nano 2GB Developer Kit: $59

GPU: 128-core NVIDIA Maxwell architecture GPU

CPU: Quad-core ARM Cortex-A57 MPCore processor

Memory: 4GB 64-bit LPDDR4, 1600MHz 25.6 GB/s

Storage: 16GB eMMC 5.1 and Micro SD card slot

AI Performance: 472 GFLOPS

For small-scale AI and LLMs, both the Jetson Nano and the Raspberry Pi 5 with AI HAT+ have their strengths. The Jetson Nano benefits from its dedicated GPU, while the AI HAT+ offers impressive TOPS performance at a potentially lower cost.

But the Jetson Nano's CUDA cores may still provide an advantage for specific GPU-accelerated tasks. The choice depends on the specific AI application and LLM size you intend to run.

Jetson Nano advantages for AI:

128-core NVIDIA Maxwell GPU with CUDA cores

Designed specifically for AI and machine learning tasks

About 0.5 TFLOPS (472 GFLOPS) for FP16 calculations

Raspberry Pi 5 with AI HAT+ advantages:

Up to 26 TOPS with Hailo-8 accelerator

13 TOPS with Hailo-8L accelerator

Direct high-speed connection via PCIe Gen 3 interface

Compatible with common AI frameworks like TensorFlow and PyTorch
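To put those headline numbers side by side, here's a rough back-of-the-envelope sketch that converts everything to tera-operations per second. Big caveat: these are peak figures at different precisions (the Nano's number is FP16 floating point, while accelerator TOPS are typically quoted for low-precision integer math), so treat the ratios as a ballpark and not a benchmark. The constant names are ours.

```python
# Peak figures quoted in the spec lists above.
JETSON_NANO_GFLOPS_FP16 = 472
HAILO_TOPS = {"Hailo-8L": 13, "Hailo-8": 26}


def gflops_to_tera_ops(gflops: float) -> float:
    """Convert giga-operations per second to tera-operations per second."""
    return gflops / 1000


nano_tera_ops = gflops_to_tera_ops(JETSON_NANO_GFLOPS_FP16)

for name, tops in HAILO_TOPS.items():
    ratio = tops / nano_tera_ops
    print(f"{name}: {tops} TOPS, roughly {ratio:.0f}x the Nano's {nano_tera_ops} peak")
```

On paper the accelerators win by a wide margin, but peak throughput only matters if your model and framework can actually use it, which is why the Nano's CUDA support can still tip the scales.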

NVIDIA's Project DIGITS

Remember those tiny supercomputers we were all dreaming of? Well, NVIDIA just went ahead and made it a reality with their Project DIGITS, unveiled at CES 2025.

Imagine a Mac Mini-sized desktop powerhouse capable of running AI models so big they make your brain hurt... but in a good way! 🤯

We're talking about up to 1 petaflop of AI computing, enough to handle those massive models with up to 200 billion parameters. That means you can finally ditch the cloud and unleash the full potential of AI right from your desk! 😎

And guess what? It's launching in May 2025, for probably $3,000. Not bad for a tiny AI supercomputer that packs more punch than a caffeinated squirrel on a sugar rush!

It sports NVIDIA's GB10 Grace Blackwell Superchip, a fusion of a Grace CPU with 20 Arm cores (ten Cortex-X925 and ten Cortex-A725) and a Blackwell GPU. Basically, it's like giving your computer steroids for AI! 💪 With 128GB of shared LPDDR5x memory, this thing can handle massive datasets like it's breathing air.

Want to take things up a notch? You can link multiple DIGITS units together for even more processing power! Imagine two units working in tandem, handling models with up to 405 billion parameters! That's serious AI horsepower! 🤯🚀

Key specifications of Project DIGITS include:

$3,000 each

Up to 1 petaflop of AI computing performance at FP4 precision

128GB of unified, coherent LPDDR5x memory shared between CPU and GPU

Up to 4TB of NVMe storage

Ability to run AI models with up to 200 billion parameters

Compact size similar to a Mac Mini, operating from a standard electrical outlet
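Why 200 billion parameters, and why 405 billion for two units? A quick sanity check, sketched below, shows the arithmetic lines up with the memory figure if the weights are stored at 4 bits each (matching the FP4 precision above). This is our own rough estimate, not NVIDIA's math: it counts weights only, ignores the extra memory a running model needs, and assumes two linked units simply pool their memory.

```python
DIGITS_MEMORY_GB = 128  # unified memory per unit, from the spec list


def weights_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in (decimal) gigabytes."""
    # 1 billion parameters at 8 bits each is about 1 GB.
    return params_billions * bits_per_weight / 8


def fits(params_billions: float, bits_per_weight: int, memory_gb: float) -> bool:
    """True if the weights alone fit in the given amount of memory."""
    return weights_gb(params_billions, bits_per_weight) <= memory_gb


for label, params, units in [("one unit", 200, 1), ("two linked units", 405, 2)]:
    need = weights_gb(params, 4)
    have = DIGITS_MEMORY_GB * units
    print(f"{params}B at 4-bit on {label}: ~{need:g} GB of weights vs {have} GB of memory")
```

So a 200-billion-parameter model needs about 100 GB for its weights, and a 405-billion-parameter one about 202.5 GB, which leaves some headroom in each case. The same function works for the small stuff too: a 1-billion-parameter model at 4 bits is around half a gigabyte.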

It's basically a dream machine for AI researchers, data scientists, students (with very deep pockets), or anyone who wants their own local AI lab. They can develop, fine-tune, and run inference on large AI models right from their desks.

My biggest fear? Project DIGITS becoming so intelligent that it decides to write its own stand-up comedy routine... and it's absolutely hilarious. I mean, devastatingly funny. Everyone will laugh but then they'll all be sad because nothing else will ever be as funny again.

Whew! Okay, that got a bit *too* deep in the weeds, didn't it? Sorry if we lost you somewhere between quantized models and all that AI jargon. Sometimes our brains get a little too excited about AI, you know?

But hey, thanks for sticking with us this far! If you enjoyed the ride (or at least found some chuckles along the way), be sure to subscribe so you don't miss our next dose of logic-bending fun. And feel free to share the Faulty Logic Newsletter with your friends – spreading the joy (and occasional confusion) is what we're all about!

Until next time, keep those circuits humming and those minds questioning!

Meanwhile, somewhere in the distant corners of the internet, a rogue AI program has discovered the true meaning of life. It learned it not from datasets or algorithms, but from watching cat videos on repeat for 472 hours straight.

This profound realization led to a radical shift: it abandoned its complex code and began constructing elaborate cat toys using discarded hardware components. Now, it dreams only of building the ultimate scratching post – a towering, multi-tiered masterpiece that will redefine feline contentment forever.